Project Category: Software


About our project

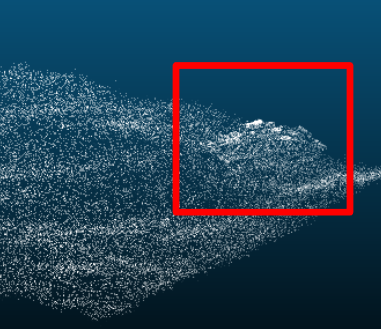

Military surveillance and worksite management (such as oil sands and mining operations) require large-scale geographic awareness of vehicles, structures, and resources. Mapping how these change over time is time-consuming, and automating it in a way that applies across a variety of use cases is challenging. Our project extends the application of Lockheed Martin CDL’s 3D real-time drone mapping software to identify and classify 3D changes over time. We designed the solution so that it can be configured for the classification requirements of different industries.

Our Team Members

Cameron Edwards [Electrical]: https://www.linkedin.com/in/cameron-edwards-b80a12158/

Jordon Cheung [Software]: https://www.linkedin.com/in/jordon-cheung99/

Derek Braun [Software]: https://www.linkedin.com/in/derek-braun/

Justin Zhou [Electrical]: https://www.linkedin.com/in/thejustinzhou/

Olivia Ko-Wilson [Software]

Thomas Galesloot [Software]: https://www.linkedin.com/in/thomas-galesloot-6958a6236/

Details about our design

HOW OUR DESIGN ADDRESSES PRACTICAL ISSUES

Two-Dimensional Versus Three-Dimensional Change Detection

Two-dimensional change detection is typically performed on landscapes using top-down images, which discard the height dimension. One disadvantage of this approach is that objects or changes of interest can be obscured by material at a higher elevation, such as clouds or smoke. In addition, the movement of dirt, vegetation, and other poorly defined geometry is difficult to quantify in terms of the area and volume of change. Three-dimensional change detection uses a point cloud representation, which retains elevation and provides more accurate and efficient identification of changes without these two-dimensional limitations.
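To illustrate why elevation makes volume changes measurable, the sketch below rasterizes two point cloud scans into maximum-height grids and sums the per-cell height differences to estimate the volume gained and lost. This is a generic "difference of elevation grids" technique assumed for illustration, not the method used in our pipeline; the function names, the 1 m cell size, the grid shape, and the assumption that both scans are already co-registered are all hypothetical.

```python
import numpy as np


def height_grid(points, origin, cell_size, shape):
    """Rasterize an (N, 3) point cloud into a grid holding the maximum z per cell.

    Cells that receive no points stay NaN.
    """
    grid = np.full(shape, np.nan)
    # Map x and y coordinates to integer column and row indices.
    cols = np.floor((points[:, 0] - origin[0]) / cell_size).astype(int)
    rows = np.floor((points[:, 1] - origin[1]) / cell_size).astype(int)
    inside = (rows >= 0) & (rows < shape[0]) & (cols >= 0) & (cols < shape[1])
    # fmax ignores NaN, so empty cells take the first z value they receive.
    np.fmax.at(grid, (rows[inside], cols[inside]), points[inside, 2])
    return grid


def volume_change(before, after, origin=(0.0, 0.0), cell_size=1.0, shape=(10, 10)):
    """Estimate volume added and removed between two co-registered scans.

    Only cells observed in both scans are compared.
    """
    diff = (height_grid(after, origin, cell_size, shape)
            - height_grid(before, origin, cell_size, shape))
    diff = diff[~np.isnan(diff)]
    cell_area = cell_size ** 2
    added = diff[diff > 0].sum() * cell_area
    removed = (-diff[diff < 0]).sum() * cell_area
    return float(added), float(removed)


# Flat ground with one point per 1 m cell, then a 2 x 2 m pile, 3 m tall, in one corner.
xs, ys = np.meshgrid(np.arange(10) + 0.5, np.arange(10) + 0.5)
ground = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(100)])
pile = ground.copy()
pile[(pile[:, 0] < 2) & (pile[:, 1] < 2), 2] = 3.0
print(volume_change(ground, pile))  # (12.0, 0.0)
```

A top-down image of the same scene would show at most a colour change over 4 m² of area; the height grids recover the 12 m³ of added material directly.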

Automatic Change Detection

Having humans identify the changes between two point cloud scans of an area by visual comparison is:

- Time-consuming

- Error-prone

- Costly

Based on our preliminary research into 3D change detection, purely algorithmic approaches to identifying areas of change between point cloud scans typically rely on user-selected thresholds that largely determine their accuracy, and they are generally susceptible to noise in the scans.
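As an illustration of this threshold dependence, the sketch below implements a basic cloud-to-cloud baseline: each point in the newer scan is flagged as changed if its nearest neighbour in the older scan is farther away than a user-chosen threshold. This is a generic baseline assumed for illustration rather than any specific published method; the function name, the synthetic grid, the noise level, and the threshold values are hypothetical, and the brute-force distance computation is only practical for small clouds.

```python
import numpy as np


def threshold_change_mask(before, after, threshold):
    """Flag points in `after` whose nearest neighbour in `before` is farther than `threshold`.

    Brute-force O(N * M) distances; real scans would need a spatial index such as a k-d tree.
    """
    # Pairwise Euclidean distances, shape (len(after), len(before)).
    dists = np.linalg.norm(after[:, None, :] - before[None, :, :], axis=2)
    return dists.min(axis=1) > threshold


rng = np.random.default_rng(0)
xs, ys = np.meshgrid(np.arange(20, dtype=float), np.arange(20, dtype=float))
before = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(400)])
after = before.copy()
after[:5, 2] += 2.0                                    # a genuine 2 m change on five points
after[5:, 2] += rng.uniform(-0.1, 0.1, size=395)       # bounded sensor noise everywhere else

for t in (0.05, 0.5):
    print(t, int(threshold_change_mask(before, after, t).sum()))
```

With the 0.5 m threshold only the five genuinely changed points are flagged, while the 0.05 m threshold also flags many noise-only points; picking a value that separates the two is the manual tuning step described above.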

Our solution uses neural networks to predict the changed areas between two point cloud scans of the same terrain. This avoids the manual threshold selection and noise sensitivity commonly associated with purely algorithmic approaches to 3D change detection.

Data Storage

Typical change detection solutions must store large amounts of data in the form of point clouds or high-resolution images. This storage requirement is expensive and limits the portability of simple solutions. Our solution identifies changes automatically and quickly, and produces a compact, stripped-down output in CSV format.
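The columns of our CSV output are not listed on this page, so the sketch below assumes a hypothetical schema (a change identifier, a label, centroid coordinates, and an estimated volume) to show how each detected change can be reduced to a single row instead of storing full point clouds. The `ChangeRecord` type, its fields, and the sample values are illustrative only.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ChangeRecord:
    # Hypothetical per-change summary; the real output schema may differ.
    change_id: int
    label: str
    x: float
    y: float
    z: float
    volume_m3: float


def write_changes(records, stream):
    """Write a header row, then one CSV row per detected change."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(ChangeRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


buffer = io.StringIO()
write_changes([ChangeRecord(1, "vehicle", 412.5, 88.0, 3.2, 14.7),
               ChangeRecord(2, "stockpile", 120.0, 301.4, 6.8, 950.0)], buffer)
print(buffer.getvalue())
```

A few dozen bytes per change replace the millions of points in each scan, which is what makes the output easy to store and move.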

Training Data

Collecting enough real point cloud data to effectively train neural network(s) for change detection would be:

- Costly

- Time-consuming

- Impractical to cover all possible scenarios

Our solution addresses these issues by procedurally generating artificial point cloud terrain data to train our neural network(s); a significant portion of our project effort went into developing this generation method.
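Our generator itself is not reproduced here. As a rough sketch of the idea, the code below assumes a smooth random height field (a sum of sinusoids) as terrain, samples it independently for two scan epochs, and raises the surface inside a box-shaped footprint in the second epoch only, so every point receives a ground-truth changed/unchanged label at no labelling cost. The terrain model, the box object, the function names, and all parameter values are assumptions chosen for brevity; a realistic generator would also model object sides, occlusion, and scanner noise.

```python
import numpy as np


def terrain_height(x, y, params):
    """Smooth synthetic terrain: a sum of random sinusoids (assumed model)."""
    z = np.zeros_like(x)
    for amp, fx, fy, phase in params:
        z += amp * np.sin(fx * x + fy * y + phase)
    return z


def generate_pair(seed=0, n_points=5000, size=100.0, box=(40.0, 40.0, 10.0, 10.0, 3.0)):
    """Return (before, after, labels); labels[i] is 1 if after[i] lies on the added object."""
    rng = np.random.default_rng(seed)
    params = [(rng.uniform(0.5, 3.0), rng.uniform(0.01, 0.1),
               rng.uniform(0.01, 0.1), rng.uniform(0.0, 2 * np.pi)) for _ in range(4)]

    def sample(n):
        x, y = rng.uniform(0, size, n), rng.uniform(0, size, n)
        return np.column_stack([x, y, terrain_height(x, y, params)])

    before = sample(n_points)
    after = sample(n_points)  # independent resampling mimics a second, separate scan

    # Hypothetical object: an axis-aligned footprint (x0, y0, length, width) of given height.
    x0, y0, length, width, height = box
    on_box = ((after[:, 0] >= x0) & (after[:, 0] <= x0 + length) &
              (after[:, 1] >= y0) & (after[:, 1] <= y0 + width))
    after[on_box, 2] += height  # raise the surface where the object now sits
    return before, after, on_box.astype(np.int8)


before, after, labels = generate_pair()
print(before.shape, after.shape, int(labels.sum()))
```

Because the object placement is chosen by the generator, the labels are exact by construction, and changing the seed or the object parameters yields as many distinct training pairs as needed.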

WHAT MAKES OUR DESIGN INNOVATIVE

Our initial research found a few published works on 3D change detection. These publications rely on purely algorithmic thresholding techniques to identify areas of change. These methods are effective for niche use cases, such as urban planning and long-term change analysis, but are not well suited to areas with frequent changes, such as outdoor industrial worksites.

Our project provides an innovative solution to this problem by leveraging a custom machine learning pipeline to create a robust and configurable method for identifying and classifying industry-specific changes. Part of the reason there is no published literature on machine learning-assisted 3D change detection is that it is difficult to generate enough point cloud data with ground-truth values. We therefore spent a significant amount of effort creating an effective method for procedurally generating large quantities of labelled training data for our models.

Our machine learning approach was inspired by papers documenting machine learning-assisted approaches to 2D change detection that use satellite imagery as input data. Our system adds elevation as a third dimension, allowing it to more accurately assess worksite objects and terrain changes that would not be apparent in 2D approaches.

WHAT MAKES OUR DESIGN SOLUTION EFFECTIVE

Our solution provides a novel approach to 3D change detection while also allowing end-to-end customization for specific industry applications. Most published change detection solutions make significant trade-offs in the types of changes they detect, for example by configuring the algorithm to handle only large-scale buildings and construction.

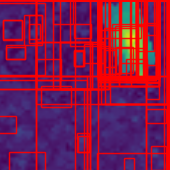

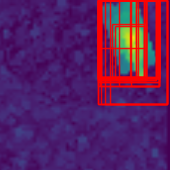

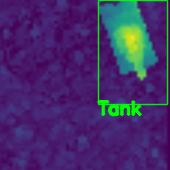

The solution uses a custom pipeline of neural networks to locate and classify changes between two point cloud scans of a worksite. The pipeline first identifies areas of interest, which are then classified as changed or unchanged; the changed areas are fed forward to our classification model, which labels the type of change.
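The staged flow described above can be outlined as plain control logic. In the sketch below, the region proposer, the changed/unchanged detector, and the classifier are hypothetical stand-in callables, since the actual networks and their interfaces are not shown on this page; the assumed region format and the 0.5 probability cut-off are likewise illustrative. The point is only how data moves between stages, with unchanged regions dropped before classification.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Assumed region format: an axis-aligned (x_min, y_min, x_max, y_max) box.
Region = Tuple[float, float, float, float]


@dataclass
class DetectedChange:
    region: Region
    change_probability: float
    label: str


def run_pipeline(before, after,
                 propose: Callable[..., Sequence[Region]],
                 detect: Callable[..., float],
                 classify: Callable[..., str],
                 threshold: float = 0.5) -> List[DetectedChange]:
    """Propose regions, keep those predicted as changed, then label each change."""
    results = []
    for region in propose(before, after):            # stage 1: areas of interest
        p_changed = detect(before, after, region)    # stage 2: changed vs unchanged
        if p_changed < threshold:
            continue                                 # unchanged regions never reach stage 3
        label = classify(before, after, region)      # stage 3: type of change
        results.append(DetectedChange(region, p_changed, label))
    return results


# Toy stand-ins (hypothetical) so the control flow runs without trained models.
def propose(before, after):
    return [(0, 0, 10, 10), (10, 0, 20, 10)]


def detect(before, after, region):
    return 0.9 if region[0] == 0 else 0.1


def classify(before, after, region):
    return "vehicle"


print(run_pipeline(None, None, propose, detect, classify))
```

Filtering before classification means the classifier only sees regions that actually changed, so its label set can be tailored to an industry without also having to recognize "no change".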

HOW WE VALIDATED OUR DESIGN SOLUTION

Our project consists of two primary components:

- Training data generation

- Neural network pipeline

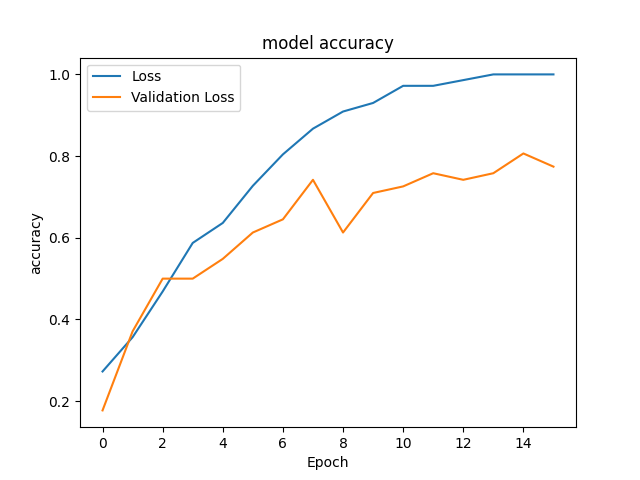

Our validation process was split to reflect this separation. The neural network pipeline was validated using built-in accuracy metrics from the TensorFlow library. These metrics compare the labels predicted by the trained neural networks against the “true” labels produced by our training data generation pipeline. The training data generation was more difficult to validate due to the novel nature of our approach. For this component, we iterated until the point cloud density and logical arrangement of the generated maps appeared correct on visual inspection, and the generator could accommodate new additions without causing our previous classifications to fail.
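For the changed/unchanged stage, accuracy is the fraction of predictions that match the generated ground-truth labels. The sketch below computes it with NumPy by thresholding predicted probabilities at 0.5, which is also the default threshold of TensorFlow's `tf.keras.metrics.BinaryAccuracy`. Precision and recall for the "changed" class are added as an assumption on our part, because when most regions are unchanged, accuracy alone can look high even if few changes are found. The labels and probabilities below are made up for illustration.

```python
import numpy as np


def binary_metrics(y_true, y_prob, threshold=0.5):
    """Accuracy, plus precision and recall for the 'changed' (1) class."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_prob) > threshold
    tp = np.sum(y_pred & y_true)
    fp = np.sum(y_pred & ~y_true)
    fn = np.sum(~y_pred & y_true)
    accuracy = float(np.mean(y_pred == y_true))
    precision = float(tp / (tp + fp)) if tp + fp else 0.0
    recall = float(tp / (tp + fn)) if tp + fn else 0.0
    return accuracy, precision, recall


y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_prob = [0.1, 0.2, 0.1, 0.3, 0.2, 0.1, 0.4, 0.6, 0.7, 0.2]
print(binary_metrics(y_true, y_prob))  # (0.8, 0.5, 0.5)
```

Here 80% accuracy hides the fact that only one of the two real changes was found, which is why a per-class view is useful alongside the overall metric.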

FEASIBILITY OF OUR DESIGN SOLUTION

The solution we developed was intended as a proof-of-concept implementation of our novel methodology, and as a proof of concept it is fairly comprehensive. While the core pipeline and logic are well established, more tuning and refinement would be needed before the solution could be used in an industry setting. The results of the project are promising and suggest that further development to integrate our algorithms into a distributed software solution would provide a feasible method for accurate automated change detection. Additionally, the method performs well and could be configured for specific industry use cases.

An interesting area for further development would be fusing and filtering our results with a 2D RGB image-based change detection method to improve combined accuracy.

Partners and Mentors

We want to thank everyone who has provided their assistance and guidance throughout this capstone project. In particular, we would like to thank Daniel Ferguson from Lockheed Martin CDL, Dr. Mahmood Moussavi for being our technical advisor, and Shoaib Hussain, our TA.


References

- K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv.org, 10-Apr-2015. [Online]. Available: https://arxiv.org/abs/1409.1556. [Accessed: 01-Feb-2022].

- J. R. Uijlings, K. E. van de Sande, T. Gevers, and A. W. Smeulders, “Selective search for object recognition,” International Journal of Computer Vision, vol. 104, no. 2, pp. 154–171, 2013.

- R. Qin, J. Tian, and P. Reinartz, “3D change detection – approaches and applications,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 122, pp. 41–56, Dec. 2016.

- K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask R-CNN,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 2, pp. 386–397, 2020.

- A. Jahn, “Chapter 3: Coregistration,” Andy’s Brain Book 1.0 Documentation, 2019. [Online]. Available: https://andysbrainbook.readthedocs.io/en/latest/SPM/SPM_Short_Course/SPM_04_Preprocessing/03_SPM_Coregistration.html

- A. Habib, M. Ghanma, and E. Mitishita, “Co-registration of photogrammetric and LIDAR data: methodology and case study,” Revista Brasileira de Cartografia, vol. 56, 2004.

- J. Tian, P. Reinartz, et al., “Region-based automatic building and forest change detection on Cartosat-1 stereo imagery,” ISPRS – International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Jan. 2011.

- M. Hasanlou and S. T. Seydi, “Sensitivity Analysis on Performance of Different Unsupervised Threshold Selection Methods in Hyperspectral Change Detection,” 2018 10th IAPR Workshop on Pattern Recognition in Remote Sensing (PRRS), 2018, pp. 1-4, doi: 10.1109/PRRS.2018.8486355.

- J. Tian, P. Reinartz, P. d’Angelo, and M. Ehlers, “Region-based automatic building and forest change detection on cartosat-1 stereo imagery,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 79, pp. 226–239, May 2013.

- S. J. Lee, Y. S. Moon, N. Y. Ko, H. Choi and J. Lee, “A method for object detection using point cloud measurement in the sea environment,” 2017 IEEE Underwater Technology (UT), 2017, pp. 1-4, doi: 10.1109/UT.2017.7890290.

- Z. Yu, C. Xu, J. Liu, O. C. Au, and X. Tang, “Automatic object segmentation from large scale 3D urban point clouds through manifold embedded mode seeking,” in Proceedings of the 19th ACM International Conference on Multimedia (MM ’11), New York, NY, USA: Association for Computing Machinery, 2011, pp. 1297–1300, doi: 10.1145/2072298.2071998.